Perhaps the greatest technological achievement in industrial and organizational (I–O) psychology over the past 100 years is the development of decision aids (e.g., paper-and-pencil tests, structured interviews, mechanical combination of predictors) that substantially reduce error in the prediction of employee performance (Schmidt & Hunter, 1998). Arguably, the greatest failure of I–O psychology has been the inability to convince employers to use them.

Empirical studies have shown for decades that General Mental Ability (GMA) is the best predictor of job performance suitable for common use. Unlike other high-performing measures, GMA can be readily established through standard testing. Certain personality traits such as conscientiousness also correlate substantially with job performance and can provide useful additional information to recruiters evaluating candidates. Despite this, recruiters stubbornly rely on intuitive methods such as unstructured interviews, which are much less accurate. In fact, new research shows that adding unstructured interviews to more precise methods such as GMA testing can make recruiters overconfident while reducing their accuracy.

The following table from the meta-analysis performed by Schmidt & Hunter (1998) provides a fair starting point.

| Personnel Measure | Validity (r) |
| --- | --- |
| GMA Tests | 0.51 |
| Work Sample Tests | 0.54 |
| Integrity Tests | 0.41 |
| Conscientiousness Tests | 0.31 |
| Structured Employment Interviews | 0.51 |
| Unstructured Employment Interviews | 0.38 |
| Job Knowledge Tests | 0.48 |
| Job Tryout Procedure | 0.44 |
| Peer Ratings | 0.49 |
| T & E Behavioural Consistency Method | 0.45 |
| Reference Checks | 0.26 |
| Job Experience (Years) | 0.18 |
| Biographical Data Measures | 0.35 |
| Assessment Centers | 0.37 |
| T & E Point Method | 0.11 |
| Years Of Education | 0.10 |
| Interests | 0.10 |
| Graphology | 0.02 |
| Age | -0.01 |

Work sample tests, job knowledge tests and job tryout procedures all require substantial inputs of time by the candidate or organisation, and so are generally not viable. Similarly, peer ratings have limited viability when recruiting an outside candidate. This leaves GMA tests, structured interviews and the behavioural consistency method as the standout single methods.

GMA tests have high validity and low application costs, and are well studied (per Schmidt & Hunter, “literally thousands of studies have been conducted”). When interviews are structured so that each candidate receives the same questions and candidates can be compared more directly, they can be quite a strong predictor, though the scientific literature has not investigated them in the same depth as GMA. It should be noted that the APS typically uses these kinds of structured interviews, so at least someone is listening to the academics. Finally, the behavioural consistency method is a way of extrapolating from a candidate’s history of achievement. It is notable that this performs substantially better than a raw count of years of job experience, but there has been little recent literature, so I have been unable to examine it in more detail.

Unstructured interviews have some predictive power on their own, but are substantially worse than those with structure. While integrity and conscientiousness tests are not hugely accurate on their own, the information they provide is nicely orthogonal to that established by GMA tests, and so they prove quite effective when used in conjunction with them.
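To make the point about orthogonality concrete, here is a minimal sketch using the standard formula for the multiple correlation of two predictors with a criterion. The function name `combined_validity` and the inter-predictor correlations are my own assumptions for illustration: the zero correlation between GMA and the personality-type tests is an idealisation of "orthogonal", and the 0.7 overlap in the last line is purely hypothetical.

```python
import math


def combined_validity(r1: float, r2: float, r12: float = 0.0) -> float:
    """Multiple correlation R of two predictors with job performance.

    r1, r2: validity of each predictor on its own.
    r12:    correlation between the two predictors (0 means orthogonal).
    """
    r_squared = (r1**2 + r2**2 - 2 * r1 * r2 * r12) / (1 - r12**2)
    return math.sqrt(r_squared)


# Single-method validities taken from the table above.
gma, integrity, conscientiousness = 0.51, 0.41, 0.31

# Assumption: the personality-type tests are uncorrelated with GMA (r12 = 0).
print(f"GMA + integrity:         {combined_validity(gma, integrity):.2f}")
print(f"GMA + conscientiousness: {combined_validity(gma, conscientiousness):.2f}")

# Hypothetical contrast: a second measure with the same validity as the
# integrity test, but overlapping heavily with GMA (r12 = 0.7), adds very little.
print(f"GMA + overlapping test:  {combined_validity(gma, 0.41, r12=0.7):.2f}")
```

Under these assumptions, an orthogonal second test lifts combined validity well above GMA alone, while a test that mostly re-measures GMA barely moves it. That is why a modestly valid but independent measure can be worth more as an add-on than a stronger but redundant one.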

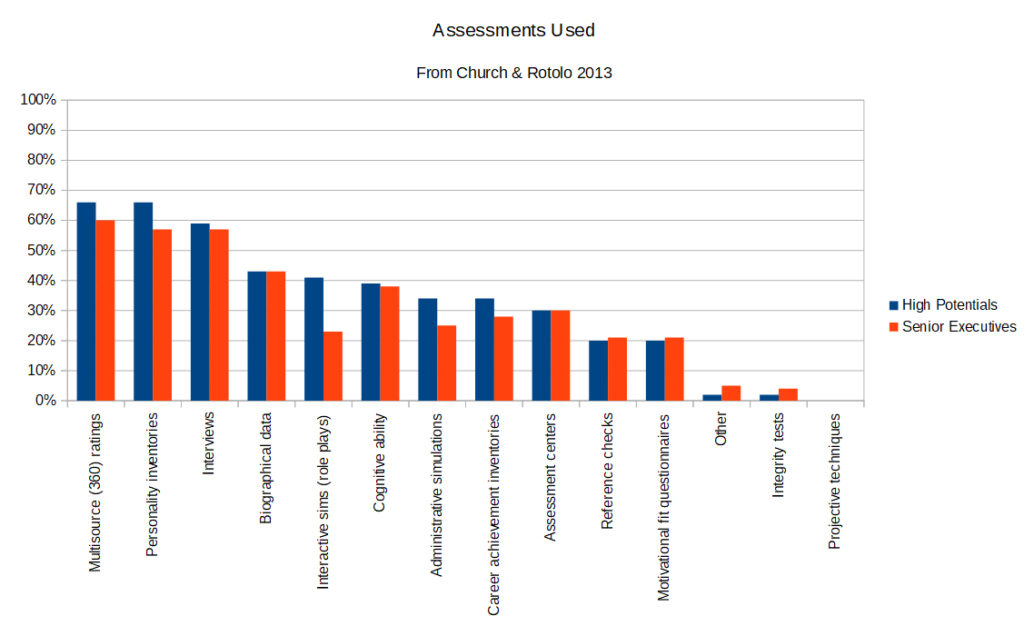

So that’s a rough state of the art. There has been some further examination since Schmidt & Hunter’s meta-analysis, but this is close enough for our purposes. Data on what is actually used in industry is more limited, so I’ll mention my utterly unscientific anecdote that private industry recruiters have never asked any more from me than unstructured interviews. When it comes to actual empirical data, we do have Church and Rotolo as a recent source on assessments of senior executives and those marked as high potential. Note the caveat that they did not select companies by any kind of scientific method, instead subjectively choosing those they judged “the best of the best”.

This indicates that cognitive ability (GMA) is substantially underutilised, even in the best of the best. The authors gave no indication of how structured the interviews were, so it would be fair to assume these are the more common unstructured kind, and thus substantially over-represented. It would be great if we had more data on the typical use of these tools in industry, particularly in the Australian case (which I suspect would be five or ten years behind the US case). But we don’t, so we’ll have to make some extrapolations.

In 2002, Rynes et al studied what experienced HR professionals believe about practices that research has proven effective, so we can draw some conclusions about general practice from that. Only 16% of respondents correctly identified that screening for intelligence is a better predictor of performance than screening for values. Only 18% correctly identified that intelligence is a better predictor of performance than conscientiousness. 32% identified that integrity tests are valid despite the risks of applicant distortion. 42% identified that being intelligent is an advantage even in a low-skilled job. Disturbingly, given the relatively widespread use of personality tests, only 42% identified that there is a difference in how effective different personality measurement systems are at predicting performance. Similarly, 51% believed that the Myers-Briggs Type Indicator was the system justified by research (the Big Five being the actual valid system).

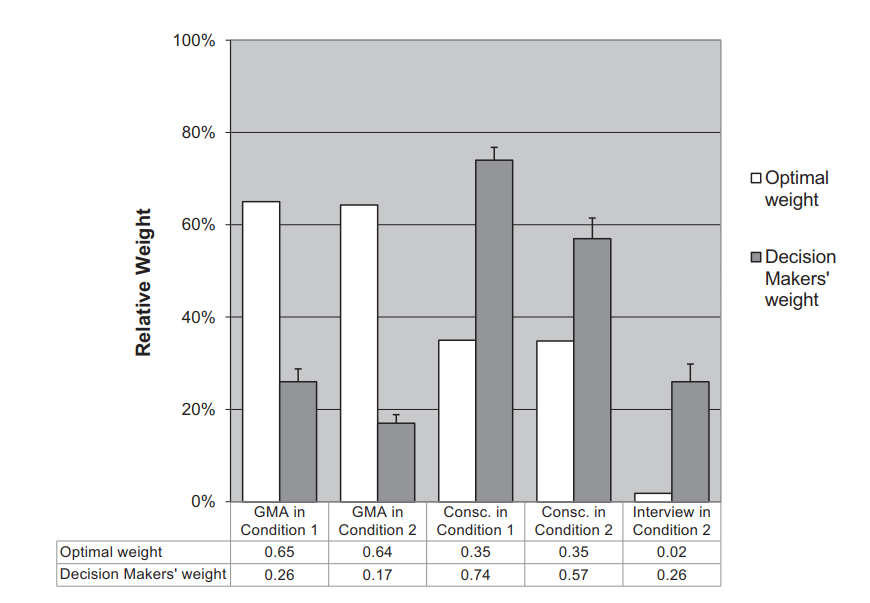

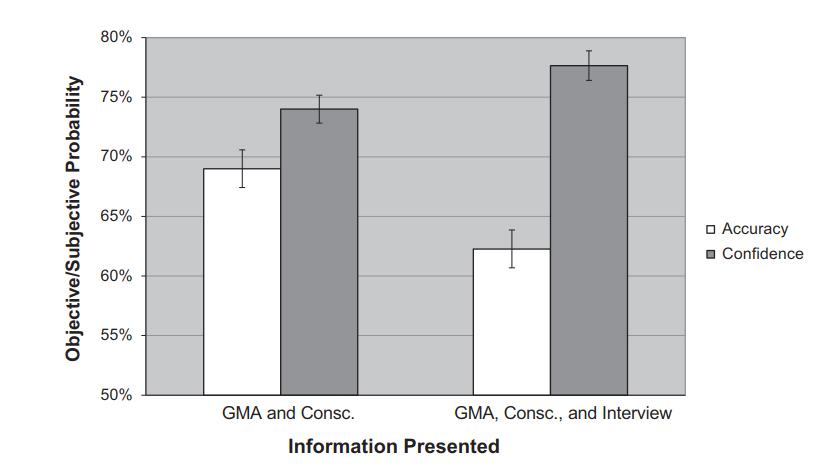

Kausel, Culbertson and Madrid’s 2016 paper provides some additional information on how hiring professionals weight factors, as well as more up-to-date research on how different methods interact. Kausel et al set out to test, using HR professionals, the finding of Dana et al that unstructured interviews actually impede decision making when combined with more valid methods. Recruiters had to decide between two candidates. In case one, they were given only the results of GMA and conscientiousness tests. In case two, they were also given the results of an interview. Instinctively you would think that having more information would allow the case two recruiters to make better decisions. In practice, this additional information actually impeded the decision making process via the dilution effect, as the recruiters placed more emphasis on this less accurate information, and this was the result:

So how did these decision makers miss the mark so significantly?

They heavily overweighted the interview and conscientiousness test, at the expense of the GMA test. This is similar to what we saw the top companies do in Church’s survey above. So personality-related measures are consistently overrated in comparison to talent and intelligence measures. It is also worth noting that these experienced professionals were handily beaten by regression-based predictions in both cases.

Dana, Dawes and Peterson’s 2013 study provides some more data on this effect of overrating interviews, though unfortunately with students rather than professionals. This study ingeniously had candidates respond either accurately or randomly to interview questions. Not only did none of the interviewers realise this, they actually believed they were able to infer more information about the candidate when the candidate responded randomly. The authors also tested both with and without an interview, and regardless of whether the interview was random or accurate, better estimates of performance were achieved by those who did not conduct an interview and instead relied upon the candidate’s past performance. Yet in a later study students were asked about the validity of different interview types (natural, accurate, random and no interview), and 57% preferred to have an interview that they knew had random answers over no interview at all! This shows how culturally embedded the idea of recruiting based on interviews is, and thus how substantial a cultural change would be required in order to adopt more accurate hiring methods.

Thus, while interviews do not help predict one’s GPA, and may be harmful, our participants believe that any interview is better than no interview, even in the presence of excellent biographical information like prior GPA.

Despite the empirical evidence, most private sector recruiting relies heavily on unstructured interviews, with scientifically validated methods underused, if used at all. This ties into my earlier investigation into confidence: interviews naturally select for those who display the cues associated with competence, who unfortunately tend to be the overconfident rather than the actually competent. Wider use of test-based methods could counter this bias by more readily evaluating candidates’ actual competence.